Is a picture worth one thousand words to a computer? How does a computer understand an image? Can a computer count the items in a visual image? These are the kinds of questions that inspire Paola Cascante-Bonilla, one of the Ph.D. student researchers in Rice University’s Visual Language and Learning (VisLang) Lab.

“The idea behind getting a computer to answer questions about visual images — commonly referred to as visual question answering or VQA — came from natural language processing research, where you present a text to the system and ask the system to answer questions about it. This is a very broad reasoning task,” said Cascante-Bonilla.

“So what would it take to get a system to answer questions about an image instead of a text? Now we are looking at two different problems: computer vision, which is how a computer interprets images, and natural language processing, which allows users to ask questions without having to first program them into the computer’s language. This is a very interesting task, with applications in areas like accessibility and robotics.”

Although VQA research is not new, Cascante-Bonilla has chosen a novel approach. She said the existing datasets used to train computers to answer image-related questions were created for different research purposes and later adopted for VQA training. What Cascante-Bonilla envisioned were datasets created specifically to train VQA models, datasets in which items in the images could be manipulated.

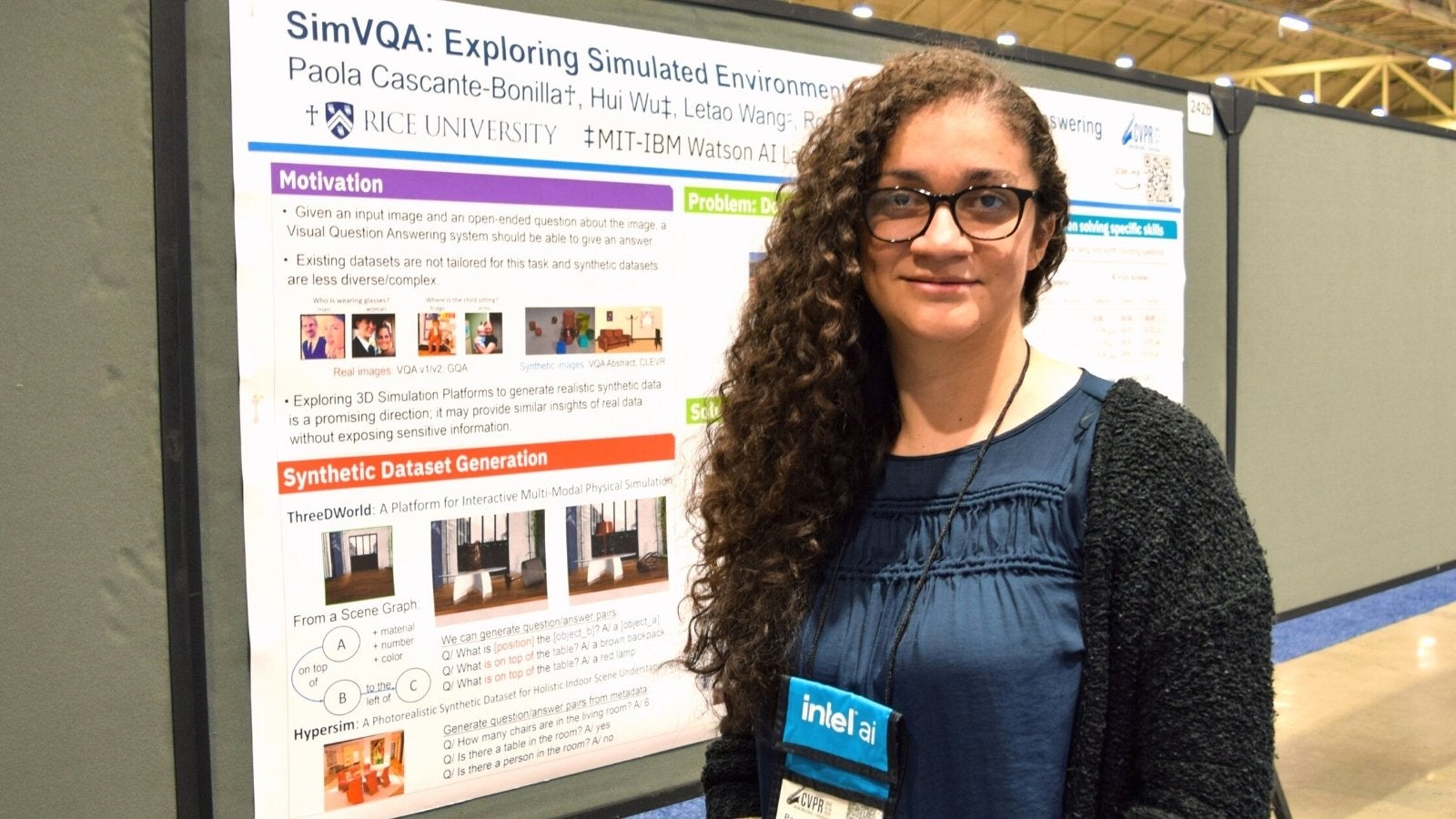

“If the researcher has full control of the scene, like being able to move a bowl or a purse around inside the image and keep asking questions about the image, that would be really cool,” she said. “Computer-generated images have become increasingly realistic and there are some powerful tools out there, so we went out and explored some of them. Using a graphics engine called ThreeDWorld, we generated images by simulating a variety of scenarios to create our new dataset, SimVQA.”

“Now we have these photorealistic and controllable datasets where you can place objects anywhere in the scene. Then you create questions and answers about the scene, change up the scene and run the questions again. If we scatter overlapping scissors all across the floor of our scene, it might be difficult even for a human to count, but the system has to figure it out.”
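Because a simulator knows exactly which objects it placed, answers to counting questions can be read directly from the scene description instead of being annotated by hand. The following is a minimal sketch of that idea, assuming a scene is represented as a plain list of object labels; the `counting_questions` function and its question template are hypothetical and are not part of ThreeDWorld or the SimVQA dataset.

```python
from collections import Counter
from typing import List, Tuple


def counting_questions(scene: List[str]) -> List[Tuple[str, int]]:
    """Build one counting question per object category in a simulated scene.

    Hypothetical helper: the answers come straight from the scene's object
    list, which the simulator knows exactly, so no human annotation is needed.
    """
    counts = Counter(scene)
    return [
        (f"How many objects labeled '{label}' are in the scene?", n)
        for label, n in sorted(counts.items())
    ]


# A small hypothetical scene: just a list of object labels.
scene = ["chair", "chair", "bowl", "purse"]
before = dict(counting_questions(scene))

# "Change up the scene": scatter five pairs of scissors, then regenerate answers.
scene_with_scissors = scene + ["scissors"] * 5
after = dict(counting_questions(scene_with_scissors))

print(after["How many objects labeled 'scissors' are in the scene?"])  # 5
```

Rerunning the same generator after every scene change keeps the questions and answers consistent with the scene, which is the property a controllable dataset is meant to provide.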

Cascante-Bonilla then identified a gap between real-world images and simulated ones. The VisLang Lab researchers prefer simulated images because they don’t expose personal information, which makes them better for privacy protection. Cascante-Bonilla said the simulations in SimVQA were already good, but the team wanted to replicate additional real-world characteristics.

“We developed a method we call F-Swap that allows us to look at all the representations of all the images in the scene and switch them around and swap them out. For example, you have two images of rooms with chairs in them. In one, the chair is in the middle of the scene. In the other, the chair is on the left side of the scene. One is a real-world image, and one is a simulated room. We were able to take the representation of the real chair and move it into the simulated scene and take the simulated chair and move it into the real-world image. That helps us align our training system so that it doesn’t become biased toward only simulated chairs or only chairs from real-world images. We’re very proud of F-Swap!”
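As a rough illustration of the feature-swapping idea described in the quote, the sketch below exchanges object-level feature vectors between a real and a simulated scene when both contain the same kind of object. It is a toy example, not the lab’s F-Swap implementation: the dictionary-of-vectors representation, the `swap_object_features` function, and the swap probability `p` are all assumptions made for illustration.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical representation: each image maps an object label to a feature
# vector (e.g. region features from a detector). Not SimVQA's actual format.
Features = Dict[str, List[float]]


def swap_object_features(
    real: Features, sim: Features, p: float, rng: random.Random
) -> Tuple[Features, Features]:
    """Toy feature swap: for each object category present in both images,
    exchange the real and simulated feature vectors with probability p.
    The inputs are copied, so the originals are left unchanged."""
    real_out = {k: list(v) for k, v in real.items()}
    sim_out = {k: list(v) for k, v in sim.items()}
    for label in sorted(real.keys() & sim.keys()):
        if rng.random() < p:
            real_out[label], sim_out[label] = sim_out[label], real_out[label]
    return real_out, sim_out


real_room = {"chair": [0.9, 0.1], "table": [0.4, 0.6]}
sim_room = {"chair": [0.2, 0.8], "lamp": [0.5, 0.5]}

# p=1.0 forces a swap for every shared category (here, only "chair").
new_real, new_sim = swap_object_features(real_room, sim_room, p=1.0, rng=random.Random(0))
print(new_real["chair"], new_sim["chair"])  # [0.2, 0.8] [0.9, 0.1]
```

Applying a swap like this at random during training means a downstream model sees simulated and real features in both kinds of scenes, which is one way to discourage it from keying on whether an object looks real or simulated.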

Another problem Cascante-Bonilla identified was the low-data regime, in which some types of questions have very few training examples. Most VQA training datasets include many questions about attributes such as color or material, whether an item is in a scene, or whether a person is standing or sitting. Those datasets contain few counting questions, so Cascante-Bonilla began writing subsets of counting questions (how many chairs are in the room, how many people, and so on) for her training data. As the new subsets were incorporated, the system began answering counting questions more accurately.

“The counting skill is really difficult,” said Cascante-Bonilla. “Typically, researchers choose what we call foundation models that execute the training by using a lot of data. But we noticed the system still struggled to count objects accurately. So we took all the questions and answers, pulled out the existing counting questions, added in our own subsets, and our results improved.”

Vicente Ordóñez, director of the VisLang Lab and Cascante-Bonilla’s Ph.D. advisor, said the SimVQA work she presented this summer at the 2022 Computer Vision and Pattern Recognition Conference (CVPR) showcases the next phase of work begun in her internship and post-internship collaboration with the MIT-IBM Watson AI Lab.

In three years, Cascante-Bonilla has published four papers as the lead author and a fifth as a co-author. Ordóñez is enthusiastic about Cascante-Bonilla’s publications and her presentations at prestigious conferences because each paper represents high-quality, innovative research that advances their field.

“Paola continues to build on our earlier work,” said Ordóñez. “All of these works aim to bypass the need for machine learning and computer vision models that require large amounts of manually annotated data. There are different types of questions in VQA, like how many chairs are in a room, how many people are sitting or standing, or how many glasses of wine are full.

“My lab has worked on [natural language] processing problems for a very long time. Now with VQA, we add in the complexity of computer vision. Generally speaking, how will we teach a machine to intelligently complete the task, answer the question, and block out the inconsequential details in the image? Paola’s solution was to use simulations, hence the name SimVQA.”

Ordóñez is interested in training computer vision and machine learning models with less annotated data than current models require. He said scientists cannot keep scaling up annotated datasets and computing resources indefinitely. Working against that looming limit, researchers strive to teach their intelligent systems to reason about the world in every situation.

He said, “In the past, Paola developed a method using pseudo-labeling. That is when we borrow labels from annotated data and apply them to unlabeled data in order to teach the system to reason about objects where little information exists. Paola is now moving in a parallel direction. Instead of borrowing labels, she is simulating new samples using a graphics engine, and that is the work she presented at CVPR this summer.
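Pseudo-labeling is commonly implemented as self-training: a model fitted on annotated data predicts labels for unlabeled data, and only confident predictions are kept as new training labels. The sketch below shows that loop with a nearest-centroid classifier standing in for a neural network; the classifier, the `min_margin` confidence rule, and the toy 2D points are assumptions for illustration, not Cascante-Bonilla’s published method.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Labeled = List[Tuple[Point, str]]


def centroids(labeled: Labeled) -> Dict[str, Point]:
    """Fit a nearest-centroid 'model': average the points of each class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for (x, y), label in labeled:
        s = sums.setdefault(label, [0.0, 0.0])
        s[0] += x
        s[1] += y
        counts[label] = counts.get(label, 0) + 1
    return {lab: (s[0] / counts[lab], s[1] / counts[lab]) for lab, s in sums.items()}


def pseudo_label(labeled: Labeled, unlabeled: List[Point], min_margin: float) -> Labeled:
    """Label each unlabeled point with its nearest class centroid, keeping it
    only if that centroid is at least `min_margin` closer than the runner-up
    (a crude confidence filter)."""
    cents = centroids(labeled)
    accepted: Labeled = []
    for p in unlabeled:
        dists = sorted((math.dist(p, c), lab) for lab, c in cents.items())
        if len(dists) == 1 or dists[1][0] - dists[0][0] >= min_margin:
            accepted.append((p, dists[0][1]))
    return accepted


labeled = [((0.0, 0.0), "cat"), ((0.0, 1.0), "cat"), ((5.0, 0.0), "dog"), ((5.0, 1.0), "dog")]
unlabeled = [(0.5, 0.5), (2.5, 0.5), (4.8, 0.2)]

accepted = pseudo_label(labeled, unlabeled, min_margin=1.0)
print(accepted)  # [((0.5, 0.5), 'cat'), ((4.8, 0.2), 'dog')]

# Next round: refit on the annotated data plus the accepted pseudo-labels.
updated = centroids(labeled + accepted)
```

The ambiguous point at (2.5, 0.5), equidistant from both centroids, is left unlabeled; filtering out low-confidence guesses like this is what keeps self-training from reinforcing its own mistakes.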

“Our group has a lot of experience in computer vision and in natural language processing research. We’ve been quite successful at pushing forward a research program that explores problems in these two areas, and we present at conferences in both fields, as well as at machine learning conferences. In fact, the opportunity to expand our research alongside machine learning faculty who are working on adjacent areas to train intelligent systems was one of the reasons my colleagues and I were attracted to Rice.”