Massive, cloud-based systems demand increased efficiency. Rice PhD graduate Weitao Wang’s paper outlines a critical way to improve how these systems run by allocating weighted bandwidth.

Weighted bandwidth and the hardware problem

Think back to the last time you used public WiFi at the coffee shop or airport. If it was crowded, something as simple as checking your email probably had excruciating lag time. But what if you and everyone else on the network got the exact bandwidth they needed for whatever they were doing?

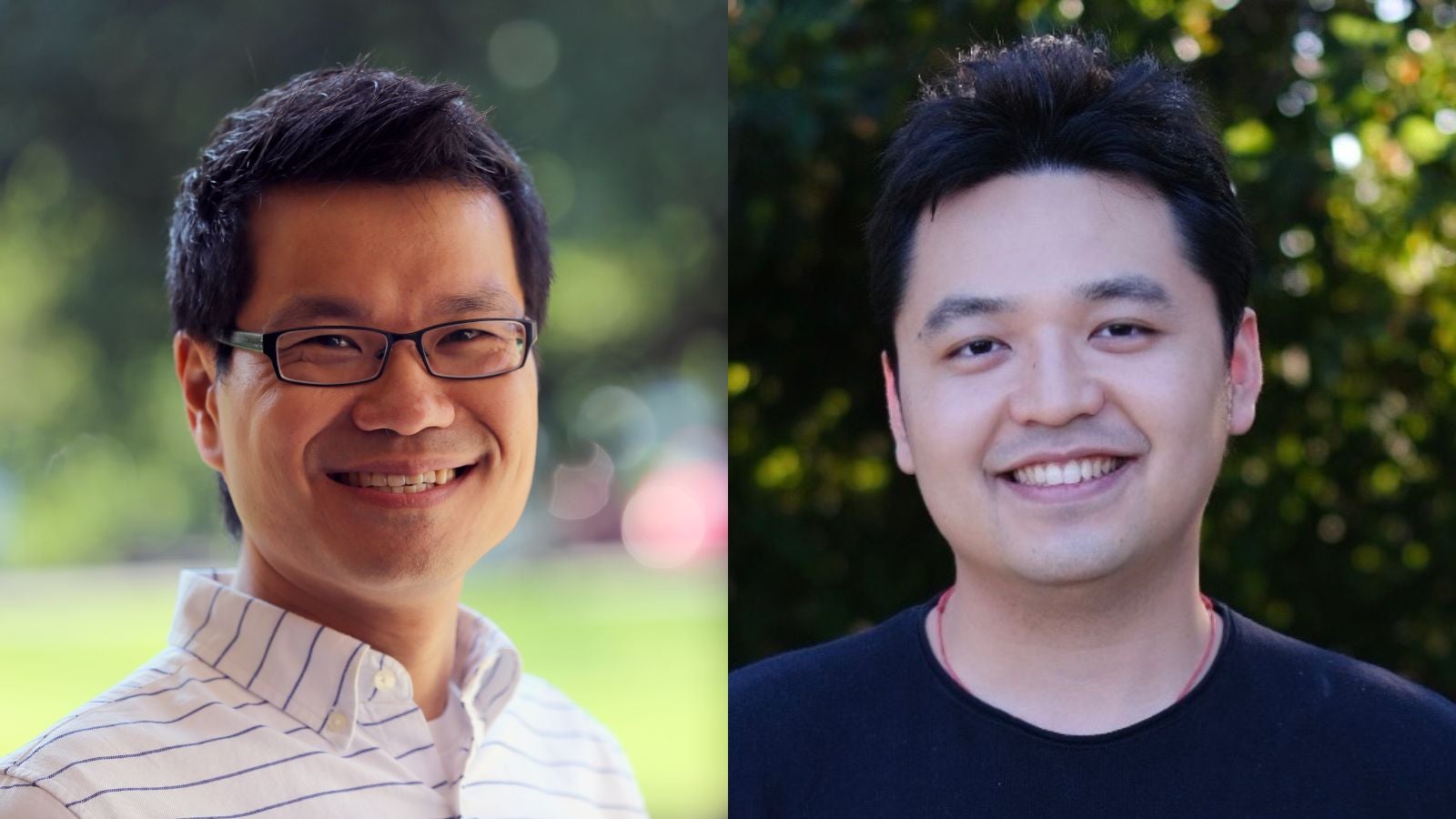

Söze, an algorithm developed jointly by Wang and Rice Computer Science and Electrical & Computer Engineering Professor T. S. Eugene Ng, does this at a massive scale. Using existing network technology and capabilities, it can enable weighted bandwidth allocation in cloud systems for the first time.

For cloud networks with hundreds of thousands of machines, weighted bandwidth allocation normally requires installing prohibitively expensive equipment. Söze avoids the need for new hardware entirely by using data that network switches already collect and send back for analysis. This data, or telemetry, records information about how the network operates to track performance and identify problems in the system.

“We figured out a new approach that takes very little help from the network. In the title, we say ‘one network telemetry is all you need,’ and that’s basically the idea,” said Professor Ng.

How Söze works

The specific telemetry that Söze uses is called congestion signaling, which indicates how crowded points in the network are by recording how long the queue is at each one. This information is included with each data packet sent back to the server.

Söze takes congestion signaling data and adjusts traffic at each network switch independently until the system as a whole converges on the desired bandwidth allocation. Söze can do this without additional aid from topology or routing data. Because it operates at so many independent points in the network, it is flexible enough to adjust bandwidth both within an application and throughout the cloud to whatever the user specifies.
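
To make the idea of converging from a single congestion signal more concrete, here is a minimal toy sketch in Python. It is not the algorithm from the paper: the single shared link, the exponential mapping from queue delay to rate, and every name and parameter (`target_rate_per_weight`, `max_rate`, `sensitivity`, `dt`) are assumptions made for illustration. Each sender knows only its own weight and the queue-delay signal, yet the rates settle in proportion to the weights.

```python
import math


def target_rate_per_weight(queue_delay, max_rate=100.0, sensitivity=1.0):
    """Hypothetical mapping from the congestion signal to a rate per unit weight.

    Any strictly decreasing function works for this toy; the exponential is an
    assumption, not something taken from the paper.
    """
    return max_rate * math.exp(-sensitivity * queue_delay)


def simulate_weighted_sharing(weights, capacity, steps=200, dt=0.5):
    """Toy model of one bottleneck link shared by weighted flows.

    Assumes max_rate * sum(weights) >= capacity, so the link can be filled.
    """
    queue_delay = 0.0
    rates = [0.0] * len(weights)
    for _ in range(steps):
        # Each sender acts independently: it sees only its own weight and the signal.
        rates = [w * target_rate_per_weight(queue_delay) for w in weights]
        # The queue grows when offered load exceeds capacity and drains otherwise.
        overload = sum(rates) / capacity - 1.0
        queue_delay = max(0.0, queue_delay + dt * overload)
    return rates


if __name__ == "__main__":
    rates = simulate_weighted_sharing([1.0, 2.0, 5.0], capacity=40.0)
    print([round(r, 3) for r in rates])
```

With weights 1, 2, and 5 on a link of capacity 40, the rates in this toy approach 5, 10, and 25: the link is fully used and split in proportion to the weights, without any sender knowing the topology or the other flows.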

Söze’s key function is helping applications prioritize the “critical path,” the longest sequence of events an application must complete in sequential order to finish a set task. The application can operate faster and more efficiently by identifying that path and weighting its bandwidth accordingly, similar to your home network devoting more bandwidth to your Netflix movie than to your open browser tab.
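
As a rough illustration of what identifying a critical path and weighting it could look like, the Python sketch below finds the longest dependency chain in a small task graph and gives the transfers on that chain a larger weight. The task names, durations, and the `boost` factor are hypothetical, and the article does not describe how applications actually choose their weights.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def critical_path(durations, deps):
    """Return (total_time, path) for the longest chain of dependent tasks.

    durations: task -> time it takes; deps: task -> list of tasks it waits on.
    """
    finish = {}  # earliest finish time of each task
    prev = {}    # the dependency that determines when each task can start
    for task in TopologicalSorter(deps).static_order():
        slowest = max(deps.get(task, ()), key=lambda d: finish[d], default=None)
        start = finish[slowest] if slowest is not None else 0.0
        finish[task] = start + durations[task]
        prev[task] = slowest
    # Walk back from the task that finishes last to recover the path.
    node = max(finish, key=finish.get)
    total, path = finish[node], []
    while node is not None:
        path.append(node)
        node = prev[node]
    return total, path[::-1]


def bandwidth_weights(durations, path, boost=4.0):
    """Hypothetical policy: critical-path tasks get a larger bandwidth weight."""
    on_path = set(path)
    return {task: (boost if task in on_path else 1.0) for task in durations}


if __name__ == "__main__":
    durations = {"load": 2, "shuffle": 5, "reduce": 3, "log": 1}
    deps = {"shuffle": ["load"], "log": ["load"], "reduce": ["shuffle"]}
    total, path = critical_path(durations, deps)
    print(total, path)
    print(bandwidth_weights(durations, path))
```

In this example the chain load, shuffle, reduce determines the finish time, so those three tasks receive the boosted weight while the side task `log` keeps the default.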

Since this is the first time cloud-based systems will be able to use weighted bandwidth allocation, Wang hopes large cloud providers like Amazon Web Services (AWS) and Google can bring it to their customers.

“The reason I chose this research direction is that I know that this is a problem [in industry settings] and I tried to find a decentralized instead of a centralized way to solve it to make it work for a large-scale environment,” said Wang.

This isn’t the first networking breakthrough from Rice’s Computer Science Department—another research team recently developed an energy-efficient power amplifier that could speed up wireless networks while cutting their energy consumption.

Wang will present his paper, titled “Söze: One Network Telemetry Is All You Need for Per-flow Weighted Bandwidth Allocation at Scale,” this July at the 19th USENIX Symposium on Operating Systems Design and Implementation (OSDI) in Boston, MA.