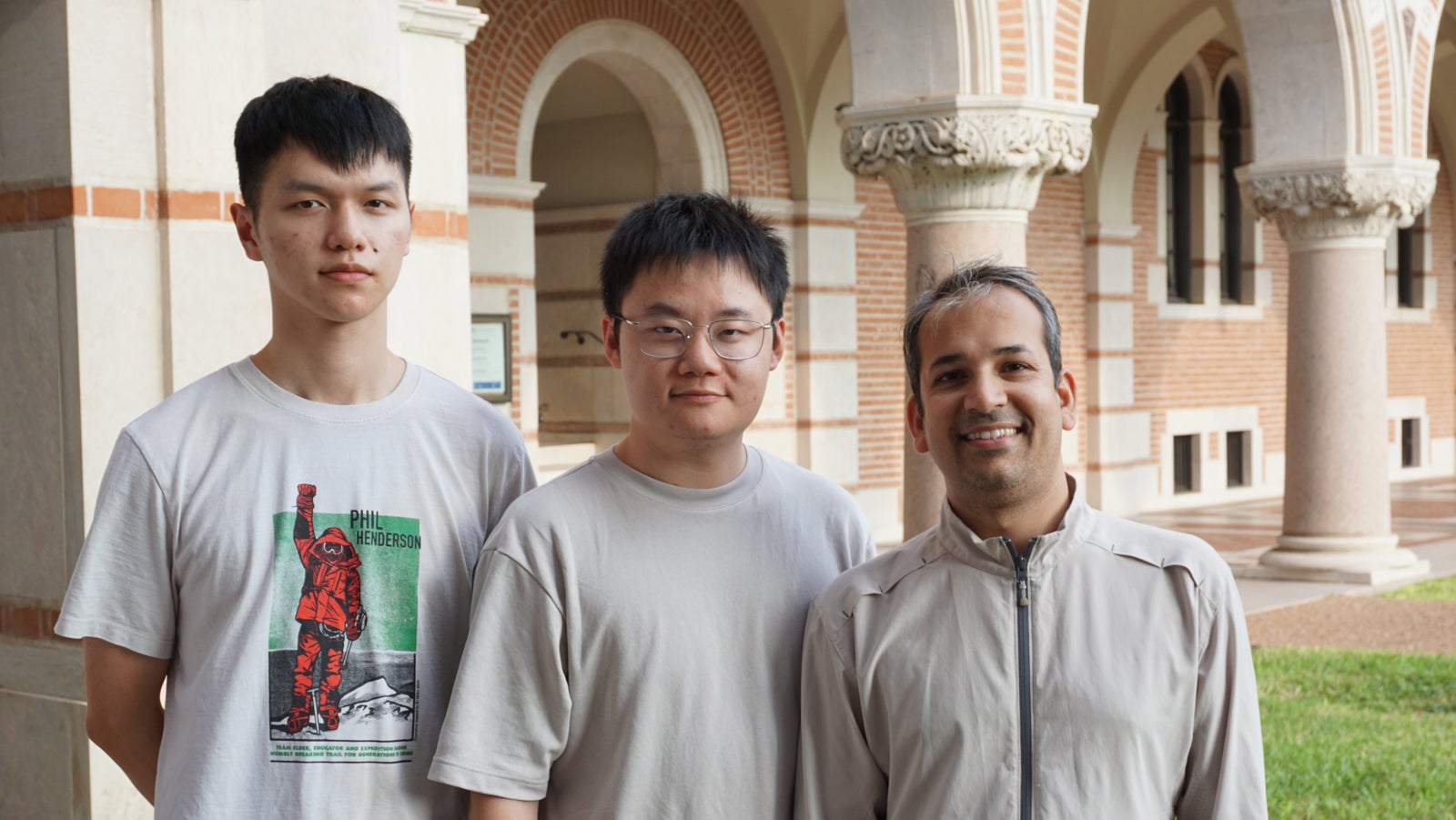

Rice University Computer Science Associate Professor Anshumali Shrivastava leads an active research team with members publishing and presenting papers at flagship conferences like ICML, ICLR, UAI, AISTATS, and RecSys each year. In 2023, their work was featured at Carnegie Mellon University as well as in Hawaii, Rwanda, Spain, and Singapore. The foundation of the lab was laid when Shrivastava predicted the future growth of artificial intelligence (AI) models and shifted his research in that direction.

“Before large learning models existed, I was a mathematician by training and focusing my Ph.D. work on some fundamental ideas to scale up machine learning to very large parameters,” said Shrivastava.

His Ph.D. collaboration to improve the efficiency of a certain kind of search and sampling was recognized as the best paper presented at NeurIPS 2014, the top conference in neural information processing systems. This work and all the tools Shrivastava developed in his Ph.D. years proved beneficial when he shifted his focus to the science of training large neural networks at Rice.

He said, “Deep learning was at its prime when I arrived at Rice; as my research progressed, I began to realize the algorithms dated back to the ’80s when efficiency and scale were less relevant than validating a working approach. But by 2015, scale had become a significant concern and I believed the main idea behind our NeurIPS paper held the key to this problem.

“At that point, it was enough of an obsession for a scientist, and that became my major focus–one I haven’t gotten over yet! In fact, I am more into it than I was ever before; it just keeps growing.”

Early connections with Amazon lead to long-term collaboration

Shrivastava spent a sabbatical semester with Amazon’s search and ad team, then called A9. He said it was an eye-opening experience and he felt fortunate to work with a team whose main focus was engineering and not just pure research.

“Pure research was part of it; that’s what you do at sabbatical, you try to go out of your comfort zone,” he explained. “But I had seen enough of the rigor in research, and wanted to develop the rigor of executing. Running AI in production is a completely different ball game. I could see firsthand how machine learning and AI are deployed in production: the engineering required, the problems it solves, its problems, the missing piece.

“This opportunity gave me a sense of the complete lifecycle of AI and deep learning inside resource-heavy projects. There was a wide gap between building prototypes in AI for research papers and actually engineering the solution in real practice. Don’t get me wrong, prototypes are good for training and education purposes, but it is far far from the real world. Clearly, this was another stepping stone for me to get obsessed with the practice of machine learning and my desire to get out of mere prototypes.”

In 2022, Shrivastava’s ongoing collaboration with Amazon yielded ROSE, the robust cache behind every product search on Amazon.com.

Research leads to entrepreneurship

Shrivastava and a Rice ECE Ph.D. alumnus, Tharun Medini, were researching large-scale neural networks when they designed a new algorithm centered around a concept called dynamic sparsity. In 2018-19, they collaborated with partners from Intel and showed their models for simple CPUs could outperform the top GPUs, which sparked industry interest.

“But when we looked at the existing software stack for AI and how convenient it was to prototype, I realized that it would be difficult for people to break out of their comfort zone in order to build something fundamentally different using our model. So in 2021, Tharun and I went all in and co-founded ThirdAI,” said Shrivastava.

“Our first cohort of engineers were some of the smartest Rice undergraduates, all CS majors that were graduating at that time. When presented with the idea of building ThirdAI, they were so excited that they turned down generous offers from Silicon Valley companies like Databricks, Facebook, and Google!”

His work with Amazon and ThirdAI revealed ‘two arms of AI’ to Shrivastava. He said the pursuit of ideas is quite different from developing software, but the two are equally critical. Now he uses his experiences to teach his Ph.D. students how to channel their research agendas toward what matters in industry: realistic software development goals. Mirroring that mentorship, his students produced research results with practical outcomes including:

Efficient Value Iteration Algorithms for Solving Linear MDPs with Large Action Space

In April 2023, Valencia, Spain hosted one of the earliest flagship events of the year, the International Conference on Artificial Intelligence and Statistics (AISTATS). There, first author Zhaozhuo Xu presented his team’s Markov Decision Process (MDP) recommendation system research paper intriguingly titled, “A Tale of Two Efficient Value Iteration Algorithms for Solving Linear MDPs with Large Action Space.”

Xu collaborated with Shrivastava and Zhao Song at Adobe Research to explore a reinforcement learning (RL) problem: an abstraction of the cold-start problem in recommendation systems. “Cold-start refers to when a new user appears with zero previous interactions with any item (news or product). An illustrative example would be the classical multi-arm bandit problem, where pulling each arm will give you a different reward. In practice, the number of items on e-commerce websites is in the millions or billions. But the current value iteration algorithms have to go over all items in every iteration in optimization, which is infeasible in practice.”

Xu said he was motivated to develop an iterative optimization algorithm by connecting theory and practice. Theoretical analysis focuses on reducing the number of iterations needed to reach the best result, which increases the cost of each iteration. Practitioners, by contrast, develop data structures and algorithms to improve running time.

“The novelty of RL combined with locality sensitive hashing (LSH) is that it provides a practical solution to improve the real-world running time of the iterative algorithm. The flexibility of LSH gives us opportunities to balance between accuracy and efficiency. As a result, we can maintain a similar number of iterations to converge while reducing the iteration cost,” said Xu.
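The idea of replacing a full scan over items with a hashed lookup can be illustrated with a minimal Python sketch. It is not the paper's algorithm: it assumes sign random projection hashing, unit-length action vectors, and hypothetical names (`SignLSHIndex`, `approx_best_action`) chosen only for illustration. The point is that the maximization step only scores the handful of actions that share a hash bucket with the query.

```python
import math
import random
from collections import defaultdict


def unit(v):
    """Scale a vector to unit length (assumed so angle and inner product agree)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class SignLSHIndex:
    """Hypothetical helper: buckets vectors by the signs of random projections."""

    def __init__(self, dim, num_bits=8, num_tables=10, seed=0):
        rng = random.Random(seed)
        # One set of random hyperplanes per hash table.
        self.planes = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_bits)]
            for _ in range(num_tables)
        ]
        self.tables = [defaultdict(list) for _ in range(num_tables)]
        self.items = []

    def _key(self, planes, v):
        return tuple(dot(p, v) >= 0 for p in planes)

    def add(self, v):
        idx = len(self.items)
        self.items.append(v)
        for planes, table in zip(self.planes, self.tables):
            table[self._key(planes, v)].append(idx)
        return idx

    def candidates(self, q):
        """Indices of items that collide with q in at least one table."""
        found = set()
        for planes, table in zip(self.planes, self.tables):
            found.update(table.get(self._key(planes, q), []))
        return found


def approx_best_action(index, q):
    """Score only the colliding actions; fall back to a full scan if none collide."""
    cands = index.candidates(q) or range(len(index.items))
    return max(cands, key=lambda i: dot(index.items[i], q))


# Toy data: 1,000 random unit-length "action" vectors in 16 dimensions.
rng = random.Random(1)
actions = [unit([rng.gauss(0, 1) for _ in range(16)]) for _ in range(1000)]
index = SignLSHIndex(dim=16)
for a in actions:
    index.add(a)

query = actions[7]  # a query vector that coincides with action 7
best = approx_best_action(index, query)
```

More hash bits or fewer tables shrink the candidate set and speed up each iteration at some cost in accuracy, which is the kind of accuracy-versus-efficiency balance Xu describes.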

“Before applying to Rice for my Ph.D. work, I had become interested in randomized algorithms. Professor Anshu is definitely the superstar in this area, making significant contributions to both theory and practical development. Working closely with him at Rice, I’ve been even more impressed by his passion and vision for the future of AI.”

Learning Multimodal Data Augmentation (LeMDA) in Feature Space

Zichang Emma Liu is the first author of a paper presented in May 2023 at the International Conference on Learning Representations (ICLR 2023) in Kigali, Rwanda. In “Learning Multimodal Data Augmentation (LeMDA) in Feature Space,” the team has devised improvements for neural networks harnessing the multiple modalities of text, images, and tabular data.

“The information we process daily comprises different modalities such as vision, language, and audio,” said Liu. “Imagine watching a film with no sound or subtitles. To really understand and reason, it is important for intelligent agents to jointly leverage information from multiple modalities. Common modalities also include signals such as LiDAR and EEG data.

“Data augmentation has had an extraordinary impact in single modality settings. But augmentation has rarely been explored in multimodal settings – after augmenting the caption of an image, it may no longer accurately describe the image. Multimodal deep learning has various applications like analyzing content to identify hateful speech, creating new content with generative AI, and predicting pet adoptions or eye movements. We hope that LeMDA will spark the community’s interest in further studying data augmentation in multimodal settings.”

Deja Vu: Contextual Sparsity

Liu also is the first author of Deja Vu, a paper demonstrating how sparsity can be used to reduce the high computation expense of large language models (LLMs). She presented the research in July 2023 at the International Conference on Machine Learning (ICML) in Honolulu, Hawaii and said their work was inspired the previous year during the Machine Learning and Systems (MLSys) conference.

“Our lab had previously worked on inference efficiency in a different setting, and our results were presented at MLSys 2022. During the conference, we had a lab dinner with several of Anshu’s recent alumni, including Ryan Spring at NVIDIA and Beidi Chen at Carnegie Mellon University. While discussing news and publications relevant to our research, we talked about Meta’s 175-billion-parameter language models and realized there could be a huge opportunity here,” said Liu.

Sparsity has been observed in a variety of deep learning networks for decades and felt like a natural research focus for Liu. She said, “For me, the interesting problem is how to smartly leverage sparsity to achieve efficiency.”

Several LLMs like ChatGPT received a lot of attention in 2023, but Liu’s team was already working on their sparsity research when the latest LLMs began making headlines in the spring. “The scale of GPT-3 amazed us with its promising performance. At a closer look, we noticed the huge computation requirements. For such an amazing model, inference efficiency will become the real blocker for widely applying it to various tasks. Large-scale models give us a huge playground in which to demonstrate what a smartly designed algorithm can do,” said Liu.

Hardware-aware Compression with Random Operation Access Specific Tile (ROAST) Hashing

Another July 2023 ICML paper from the Shrivastava lab was presented by first author Aditya Desai, who collaborated with his advisor and Keren Zhou, an award-winning high-performance computing researcher and recent Rice CS Ph.D. alumnus. Their solution, “Hardware-aware Compression with Random Operation Access Specific Tile (ROAST) Hashing,” introduces a hardware-aware compression model that is both model-agnostic and cache-friendly to help reduce the cost of training and deploying large models. This work expands on the team’s ROBE research, which earned Desai the MLSys Outstanding Paper recognition in 2022.

Desai said, “Machine learning is the new electricity. It is going to change the landscape of automation in ways we have not yet imagined. However, we’re seeing a consistent trend of increasing resource requirements including computation and memory. To achieve the broadest impact, machine learning models need to be deployable on devices with much stricter resource constraints. Model compression has a direct impact on the use of this technology and that is why I decided to invest my time in solving the challenge.”

Image and text processing applications on mobile devices are examples of large models that must work within the device’s resource constraints. Desai said, “Every time you open your phone to text, the next word suggestions are produced by text processing models. Face recognition is a common image processing application that is used for security purposes and is improving with the development of new ML models. With ROAST, we can efficiently train and deploy the model using a much smaller memory footprint (~10-100x smaller) in text and image classification tasks.”

He and his colleagues focused on hash functions, simple and lightweight functions with independence properties that make them suitable for model compression. “In ROAST, we use hash functions to redirect memory access, causing reuse of model parameters across the models. This provides an efficient and effective method to reduce the memory footprint of the model.”
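The parameter reuse Desai describes can be illustrated with a minimal Python sketch of hashing-based weight sharing. It is a simplified, element-wise version of the general idea, not ROAST itself, which maps whole tiles of parameters so that memory access stays cache-friendly. The class name `HashedMatrix`, the hash constants, and the sizes below are assumptions made only for illustration.

```python
import random


class HashedMatrix:
    """Hypothetical sketch: a rows x cols matrix backed by a small shared array."""

    PRIME = 2_147_483_647  # modulus for a simple universal-style hash

    def __init__(self, rows, cols, budget, seed=0):
        rng = random.Random(seed)
        self.rows, self.cols = rows, cols
        # Random hash coefficients; any lightweight hash family could be used.
        self.a = rng.randrange(1, self.PRIME)
        self.b = rng.randrange(1, self.PRIME)
        self.c = rng.randrange(self.PRIME)
        # The only parameters actually stored and trained.
        self.memory = [rng.uniform(-0.1, 0.1) for _ in range(budget)]

    def slot(self, i, j):
        """Redirect the access for weight (i, j) to a slot in shared memory."""
        return ((self.a * i + self.b * j + self.c) % self.PRIME) % len(self.memory)

    def weight(self, i, j):
        return self.memory[self.slot(i, j)]

    def matvec(self, x):
        """Multiply the virtual matrix by x without ever materializing it."""
        return [
            sum(self.weight(i, j) * x[j] for j in range(self.cols))
            for i in range(self.rows)
        ]


# 64 x 64 = 4,096 virtual weights backed by only 256 stored values.
W = HashedMatrix(rows=64, cols=64, budget=256)
compression = (W.rows * W.cols) / len(W.memory)
y = W.matvec([1.0] * 64)
```

Because many positions in the matrix resolve to the same stored value, an update to one slot changes every weight that hashes to it, which is how the memory footprint shrinks while the layer keeps its nominal shape.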

Graph Self-supervised Learning via Proximity Distribution Minimization

On the heels of July’s ICML conference in Hawaii, the Shrivastava Lab presented a new paper in Pennsylvania at the Conference on Uncertainty in Artificial Intelligence (UAI), hosted at Carnegie Mellon University in early August 2023. Tianyi Tony Zhang took to the podium as the first author of their research paper on improving graph self-supervised learning tasks while simultaneously achieving higher memory efficiency.

“Self-supervised learning (SSL) techniques, such as contrastive learning for computer vision and masked language modeling for natural language processing, have propelled the development of many machine learning areas,” said Zhang. “However, SSL for graphs has remained a challenge due to unique properties of graph data, such as being non-Euclidean and having variable degrees.”

The goal of SSL for graphs is to learn meaningful representations that capture the underlying semantics of the graph structure and vertex information, which can be used for making inferences and predictions.

He said, “SSL for graphs has important applications in many fields. For example, it can be used for predicting the function of and interactions between proteins in the field of bioinformatics. In social networks, it can be used for making inferences on users, such as predicting whether a user is acting in a malicious manner. In recommendation systems, it can be used for generating recommendations for users. In short, SSL for graphs can be applied to many areas in which graph data is present and labels are sparse. Our model supports the growth of SSL for graphs in applications like these.”

Zhang was drawn to Shrivastava’s interesting problem-solving methods and the wide applicability of his work. “Anshu’s contagious passion for research was balanced by giving us freedom to explore different research areas while focusing on an overarching goal,” he said. “The research in our group is not only novel and interesting to the research community, but it is also practical and applicable for the industry.

“Anshu has taught me many important lessons, but his advice to focus on fundamental algorithmic research had the greatest impact and led to my current research in developing efficient software systems using fundamental tools of hashing.”

From Research to Production: Towards Scalable and Sustainable Neural Recommendation Models on Commodity CPU Hardware

Shrivastava presented the next 2023 paper coming out of his lab at the September ACM Conference on Recommender Systems (RecSys) in Singapore. The paper evolved as ThirdAI and Rice researchers pondered how to make industrial-style recommender systems more affordable and accessible. To this end, they developed efficient neural recommendation models that can be trained and deployed on commodity CPU machines.

Watch the Rice University Department of Computer Science website for news of the next Shrivastava Lab papers, which will be presented at NeurIPS in December 2023.