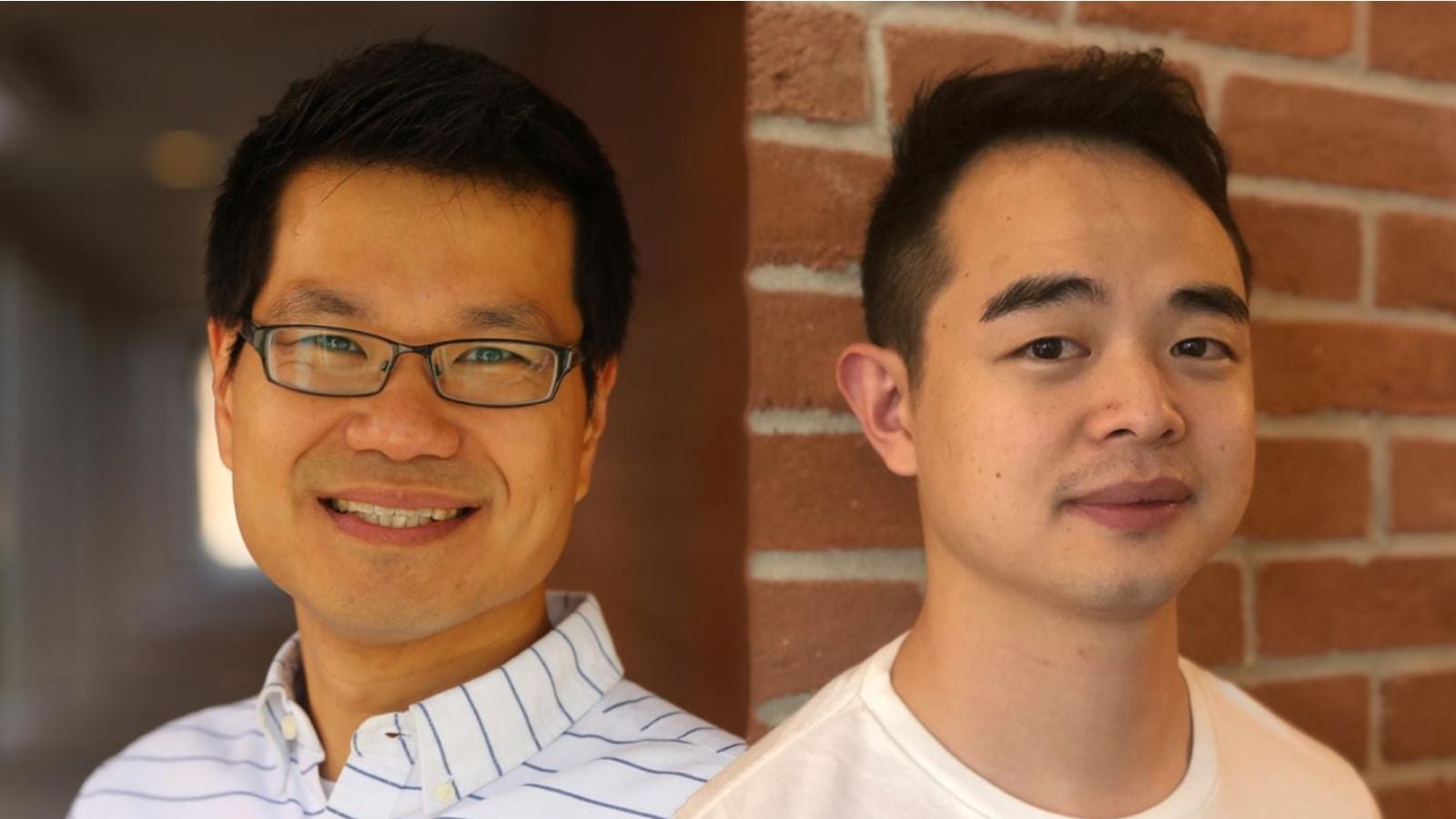

Rice University computer scientists T. S. Eugene Ng and Zhuang Wang and their Amazon Web Services colleagues have developed a method for speeding up recovery when large language model (LLM) training is interrupted by software or hardware failures. Their system, Gemini, will be revealed in Koblenz, Germany, at the 29th ACM Symposium on Operating Systems Principles (SOSP), October 23-26, 2023.

“Gemini is a distributed training system that leverages CPU memory to achieve fast failure recovery in LLM training via high checkpoint frequency and prompt checkpoint retrieval,” said Wang.

Rice Professor for Computer Science and Electrical and Computer Engineering Ng said, “Training an extremely large model like an LLM that takes months using thousands of computers is a major investment, and like any other major investment, we must protect it. Checkpointing is a method that protects this investment from inevitable computer system failures that can be caused by both hardware and software errors. This protection should be instantaneous and transparent without disrupting the primary objective of model training, but existing solutions do not satisfy this requirement. Our work on Gemini represents a new approach that achieves ideal protection.”

It has been a busy three months for Wang. He co-first-authored the Augmented Queue paper presented at SIGCOMM 2023 in New York, defended his CS Ph.D. dissertation at Rice, and moved to Seattle to join the AWS AI team as an applied scientist. Taking a break to answer questions about their Gemini project, Wang began by explaining how a checkpoint is used in learning systems.

Checkpoint is similar to saved progress in a video game

“A checkpoint is a snapshot of the model states in a learning system at a specific point in time. It consists of the weights of parameters and the optimizer states, with which the learning system can be recovered from either software or hardware failures. As an analogy, a checkpoint is like the saved progress of a video game and it enables users to resume their progress without restarting the game from the very beginning,” said Wang.

Learning systems for LLMs need to checkpoint the model states periodically, and the checkpoint frequency determines how much training progress is lost when recovering from a failure. However, achieving a high checkpoint frequency poses significant challenges because each checkpoint is extremely large (over 1 TB), a consequence of the huge number of parameters in an LLM, which can exceed 100 billion.
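A rough calculation shows how a 100-billion-parameter model reaches that size. The sketch below assumes every value is stored as a 4-byte float and that the optimizer keeps two extra values per parameter, as the widely used Adam optimizer does. Those storage assumptions and the function name are illustrative and are not taken from the Gemini work.

```python
# Rough checkpoint-size estimate. The storage layout is an assumption.
BYTES_FP32 = 4  # assumed: every stored value is a 32-bit float


def checkpoint_bytes(num_params: int, copies_per_param: int = 3) -> int:
    """Estimate the bytes needed for weights plus optimizer states.

    copies_per_param=3 models an Adam-style optimizer: the weight itself
    plus two optimizer values (momentum and variance) per parameter.
    """
    return num_params * copies_per_param * BYTES_FP32


size = checkpoint_bytes(100_000_000_000)  # 100 billion parameters
print(f"{size / 1e12:.1f} TB")  # 1.2 TB
```

Under these assumptions the checkpoint comes to about 1.2 TB, in line with the "over 1 TB" figure above.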

Storing checkpoint data remotely slows model training

Wang said, “Existing approaches store the checkpoints in a remote persistent storage system. However, their checkpoint frequency is limited by the low network bandwidth between the remote persistent storage system and the GPU (graphics processing unit) machines, because it can take a very long time, over an hour in some cases, to transmit each checkpoint to the remote storage system. When recovering training, the loaded model states were saved a few hours earlier, causing a significant loss of training progress.

“Moreover, in case of a failure, it also takes a long time for the learning system to retrieve the saved checkpoint from the remote storage system, during which all GPUs must remain idle as they wait for the completion of the checkpoint retrieval. Several hours of GPU resources are wasted with each failure. When we consider that a learning system can have thousands of GPUs and suffer from hundreds of failures, it is easy to see how hundreds of thousands of GPU hours are wasted during failure recovery.”
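The arithmetic behind that estimate is a simple product. The sketch below uses illustrative round numbers (1,000 GPUs, 100 failures, 3 idle hours per failure) chosen only to match the orders of magnitude Wang describes. They are assumptions, not measurements from any specific training run.

```python
def wasted_gpu_hours(num_gpus: int, num_failures: int,
                     idle_hours_per_failure: float) -> float:
    """GPU hours lost while every GPU sits idle during each recovery."""
    return num_gpus * num_failures * idle_hours_per_failure


# Illustrative inputs only: 1,000 GPUs, 100 failures, 3 idle hours each.
print(wasted_gpu_hours(1000, 100, 3.0))  # 300000.0
```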

Fast failure recovery with in-memory checkpoints

Their solution, Gemini, leverages existing dynamic random access memory (DRAM) resources on the GPU machines participating in the learning system for checkpoint storage. Because the bandwidth between the GPUs and DRAM is much higher than the bandwidth between GPU machines and the remote persistent storage system, Gemini can reduce both the checkpoint time and retrieval time by more than 100x.
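To put the 100x figure in perspective, the sketch below works backward from the numbers quoted in this article: a 1 TB checkpoint that takes one hour to reach remote storage implies an effective bandwidth of roughly 278 MB/s. It then assumes a path that is exactly 100 times faster. Both figures are back-of-the-envelope assumptions for illustration, not measurements from the Gemini paper.

```python
def transfer_seconds(size_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to move a checkpoint at a given effective bandwidth."""
    return size_bytes / bandwidth_bytes_per_s


CHECKPOINT_BYTES = 1e12   # 1 TB, the checkpoint size quoted in the article
REMOTE_SECONDS = 3600.0   # assumed: "over an hour" taken as exactly one hour

# Effective remote-storage bandwidth implied by the two numbers above.
remote_bw = CHECKPOINT_BYTES / REMOTE_SECONDS
# Assumed: the in-memory path is exactly 100x faster.
local_bw = 100 * remote_bw

print(f"{remote_bw / 1e6:.0f} MB/s")                                # 278 MB/s
print(f"{transfer_seconds(CHECKPOINT_BYTES, local_bw):.0f} s")      # 36 s
```

With these assumed inputs, an hour-long transfer shrinks to about half a minute.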

“We demonstrated that Gemini can achieve the optimal checkpoint frequency for LLMs with 100 billion parameters. Essentially, Gemini can checkpoint the model states for every iteration during the training without incurring overhead on training throughput,” said Wang.

“As an analogy, assume you are playing a difficult video game that has many levels and each level lasts several hours. Existing approaches only allow users to save their progress at the end of each level. When a player character dies in the middle of a level, users have to restart the game from the beginning of that level. In contrast, Gemini allows the game to automatically save the users’ progress every minute and it has no negative impact on user experience.”

With a grin, Wang switched from an analogy to a pun. “Gemini is a game changer,” he said.

“When it comes to fault tolerance for LLM training, it reduces the failure recovery overhead from several hours to a few minutes by automatically checkpointing the model states for every iteration to existing DRAM resources in a learning system.”

Ng applauded his Ph.D. student’s persistent research, culminating in Wang’s role as the first author of a paper presented at the flagship operating systems conference.

“Zhuang has provided a very impressive level of technical leadership throughout this project, from problem analysis and intricate solution design decisions all the way to prototyping, so much so that our AWS colleagues were eager to recruit Zhuang to join their team permanently after his graduation,” said Ng.