Led by Anastasios Kyrillidis, the Optimization Lab (OptimaLab) in Rice University’s Computer Science Department specializes in optimizing — increasing the efficiency of — very complicated calculations. In collaboration with other Rice Computer Science and Electrical and Computer Engineering researchers, Kyrillidis secured a competitive grant funded by the National Science Foundation and Intel to develop a new class of distributed neural network training algorithms, called Independent Subnetwork Training or IST.

The OptimaLab IST work began quietly during the height of the pandemic, then quickly gathered steam — producing four papers within 15 months of the award announcement.

Kyrillidis said, “From a high-level perspective, many recent successes in machine learning can be attributed to the development of neural networks (NNs). However, this success comes at a prohibitive cost: to obtain better ML models, larger and larger NNs need to be trained and deployed.”

Bigger is not better for mobile ML applications, where model training and inference must be carried out in a timely fashion on devices and platforms with limited computation, communication, and energy resources, such as handheld devices, drones, and ‘edge’ infrastructure.

“Now we are looking at running sophisticated and resource-heavy applications across diverse and still-developing edge networks, over mobile devices that are unreliable, delivered through wireless networks with known communication bottlenecks,” said Kyrillidis.

“This project will address those challenges by investigating a new paradigm for computation- and communication-light, energy-limited distributed NN learning. Success in this project will produce fundamental ideas and tools that will make mobile (and beyond) distributed learning practical, and that is why both the NSF and Intel are interested in funding our research.”

The research may also have applications for the Internet of Things, where small devices use sensors to communicate data about their environment over low-bandwidth infrastructure. The devices are frequently small and energy efficient, but demand for the data they provide is increasing, so researchers are exploring ways to give them more robust machine learning capacity by training with graphics processing units (GPUs).

“A more familiar distributed training method may be the spell checker or word prompts generated as mobile phone users type an email or text,” said Kyrillidis.

“There are different ways to approach this kind of machine learning, but essentially email and text writers are training their phone’s software as they type. All those training ‘lessons’ are connected and used to improve the overall performance of the software, but your OS provider cannot anticipate everyone’s unique word choices so the devices have to handle some of the training on their end.”

No one wants their phone to heat up while it’s trying to predict a word or spell-correct a text, so the training must run in small, lightweight pieces. Delegating parts of the training to individual remote devices is similar to the distributed method used in independent subnetwork training (IST). At Rice, the NSF-Intel-funded IST optimization research is developing methods to train small subnetworks over and over until the full model has absorbed all those lessons globally.

Diving further into the OptimaLab research, Kyrillidis said, “Our IST decomposes a large NN into a set of independent subnetworks. Each of those subnetworks is trained at a different device, for one or more back propagation steps, before a synchronization step. In the synchronization, updated subnetworks are sent from edge-devices to the parameter server for reassembly into the original NN before the next round of decomposition and local training begins.

“Because the subnetworks share no parameters, synchronization requires no aggregation — it is just an exchange of parameters. Moreover, each of the subnetworks is a fully operational classifier by itself; no synchronization pipelines between subnetworks are required.”
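The decompose, train locally, and reassemble cycle described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the OptimaLab implementation: it uses a one-hidden-layer ReLU network with no biases, simulates the devices in a loop on one machine, and the function names (`partition_hidden`, `local_sgd`, `ist_round`), the squared-error loss, and the dropout-style output scaling by the number of workers are all choices made for this example.

```python
import numpy as np

def partition_hidden(n_hidden, n_workers, rng):
    """Randomly split hidden-unit indices into disjoint groups, one per worker."""
    return np.array_split(rng.permutation(n_hidden), n_workers)

def local_sgd(W1, W2, X, y, scale, lr, steps):
    """A few gradient steps on one subnetwork (ReLU MLP, squared-error loss).

    `scale` multiplies the subnetwork output so that the reassembled network,
    which sums all subnetworks, stays on the same scale (assumption of this sketch).
    """
    W1, W2 = W1.copy(), W2.copy()
    for _ in range(steps):
        H = np.maximum(X @ W1, 0.0)               # hidden activations
        err = scale * (H @ W2) - y                # prediction error
        grad_W2 = scale * H.T @ err / len(X)
        grad_H = scale * (err @ W2.T) * (H > 0)   # backprop through ReLU
        grad_W1 = X.T @ grad_H / len(X)
        W1 -= lr * grad_W1
        W2 -= lr * grad_W2
    return W1, W2

def ist_round(W1, W2, shards, n_workers, rng, lr=0.05, local_steps=5):
    """One round: decompose, train each subnetwork on its own data, reassemble."""
    groups = partition_hidden(W1.shape[1], n_workers, rng)
    new_W1, new_W2 = W1.copy(), W2.copy()
    for idx, (X, y) in zip(groups, shards):
        # Each "device" receives only the weights attached to its hidden units.
        sub_W1, sub_W2 = local_sgd(W1[:, idx], W2[idx, :], X, y,
                                   n_workers, lr, local_steps)
        # Synchronization: groups are disjoint, so updated weights are simply
        # written back into place; nothing is averaged.
        new_W1[:, idx] = sub_W1
        new_W2[idx, :] = sub_W2
    return new_W1, new_W2, groups

# Toy run: 4 inputs, 6 hidden units, 1 output, 3 simulated devices.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 6))
W2 = rng.normal(size=(6, 1))
shards = [(rng.normal(size=(8, 4)), rng.normal(size=(8, 1))) for _ in range(3)]
new_W1, new_W2, groups = ist_round(W1, W2, shards, 3, rng)
```

Because the index groups are disjoint, the write-back step touches each weight exactly once, which mirrors the point in the quote that synchronization is an exchange of parameters rather than an aggregation.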

For this novel distributed training method, the key benefits are twofold. Each mobile node handles fewer training parameters per iteration, which significantly reduces communication overhead, and each device trains a much smaller model, which lowers computational cost and energy consumption. With fewer parameters per node, smaller models, and the related resource savings, there is good reason to expect IST to scale much better than more traditional algorithms for mobile applications.
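To give a rough sense of the savings, the following sketch counts the weights one device would hold when the hidden layers of a fully connected network are split evenly across devices. The layer widths and the number of devices are hypothetical values chosen for illustration, biases are ignored, and the even split of hidden units (with input and output layers kept whole) is an assumption of this example rather than a detail taken from the article.

```python
def mlp_weight_count(layer_widths):
    """Number of weights in a fully connected network (biases ignored)."""
    return sum(a * b for a, b in zip(layer_widths, layer_widths[1:]))

def subnetwork_widths(layer_widths, n_workers):
    """Keep input and output widths; split every hidden layer evenly."""
    hidden = [w // n_workers for w in layer_widths[1:-1]]
    return [layer_widths[0], *hidden, layer_widths[-1]]

# Hypothetical network: 784 inputs, two hidden layers of 4096 units, 10 outputs.
full = [784, 4096, 4096, 10]
n_workers = 8

sub = subnetwork_widths(full, n_workers)   # [784, 512, 512, 10]
full_count = mlp_weight_count(full)        # 20,029,440 weights
sub_count = mlp_weight_count(sub)          # 668,672 weights per device

print(f"full model:      {full_count:,} weights")
print(f"one subnetwork:  {sub_count:,} weights")
print(f"reduction:       {full_count / sub_count:.1f}x")
```

In this toy setting each device holds roughly one thirtieth of the full model, not one eighth, because a weight matrix between two split hidden layers shrinks along both dimensions.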

The IST contributors span departments and universities. Now a postdoctoral researcher at ETH Zurich, Binhang Yuan led one of the first NSF-Intel grant-funded papers while wrapping up his Ph.D. at Rice under the direction of Chris Jermaine, with Kyrillidis as a co-adviser. Kicking off the Rice IST research, the paper on distributed learning of deep NNs using IST was co-authored by Jermaine and Kyrillidis and graduate students Yuxin Tang, Chen Dun, and Cameron Wolfe. This paper was recently presented at the 2022 VLDB (Very Large Data Bases) conference.

Additional papers by Dun, Wolfe, and Jasper Liao, presented at the 2022 UAI (Uncertainty in Artificial Intelligence) conference and accepted at the journal TMLR (Transactions on Machine Learning Research), advanced different aspects of the IST research. Yingyan Lin, an assistant professor in Rice’s ECE Department, has focused her efforts on efficient AI on hardware (with a special focus on efficient compressed NN training and inference, as well as pruning techniques for compression). Rice CS alumnus Qihan Chuck Wang (BS '21, MS '22) is working on how the IST ideas can be combined with other established compression tools, like sparsity in neural networks and pruning, for more efficient distributed training.

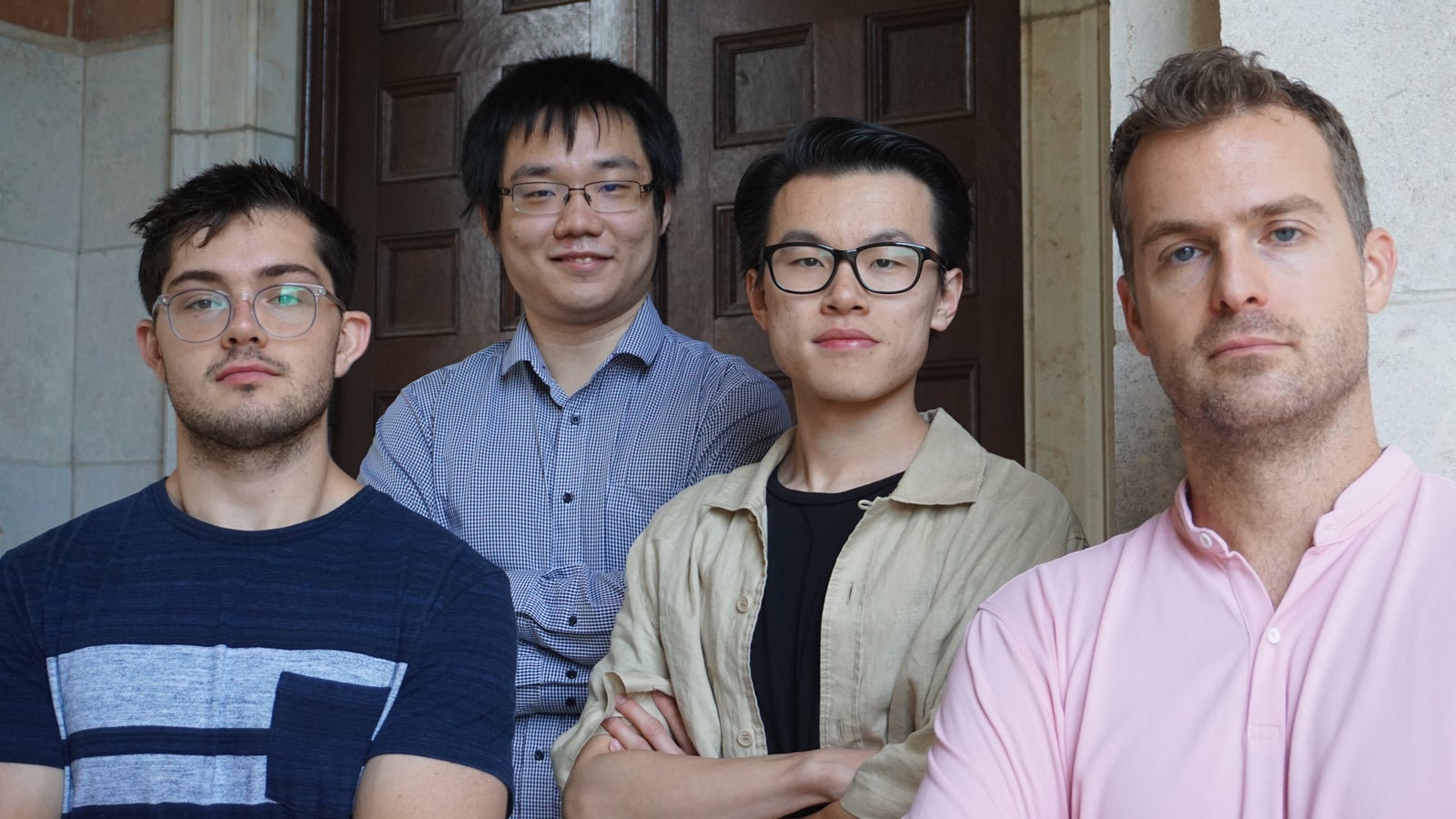

Photo caption: Under the direction of Anastasios Kyrillidis (right), OptimaLab members Cameron Wolfe, Chen Dun, and Jasper Liao tackle neural network training algorithms with an NSF-Intel grant.